NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction

Abstract

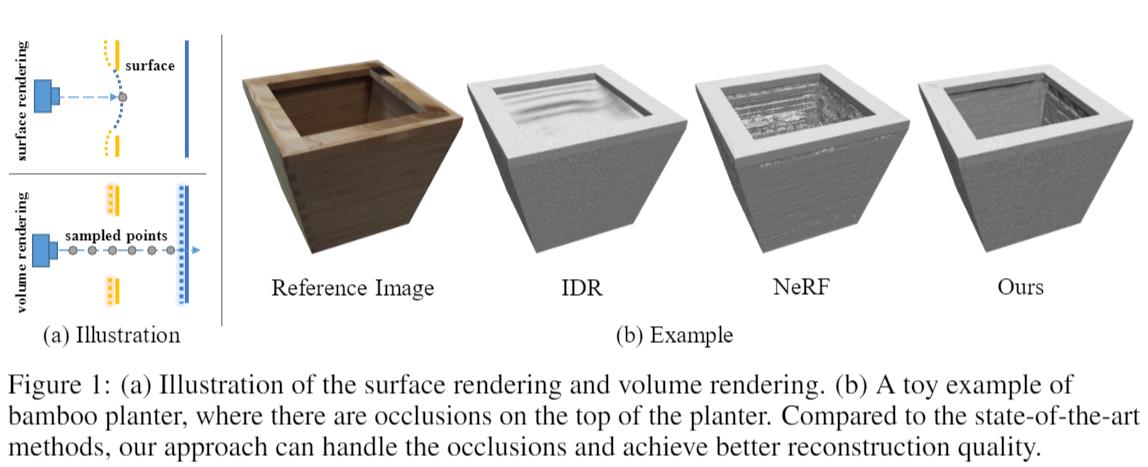

We present a novel neural surface reconstruction method, called NeuS (pronunciation: /nuːz/, same as "news"), for reconstructing objects and scenes with high fidelity from 2D image inputs. Existing neural surface reconstruction approaches, such as DVR [Niemeyer et al., 2020] and IDR [Yariv et al., 2020], require foreground masks as supervision, easily get trapped in local minima, and therefore struggle to reconstruct objects with severe self-occlusion or thin structures. Meanwhile, recent neural methods for novel view synthesis, such as NeRF [Mildenhall et al., 2020] and its variants, use volume rendering to learn a neural scene representation that can be optimized robustly, even for highly complex objects. However, extracting high-quality surfaces from this learned implicit representation is difficult because the representation lacks sufficient surface constraints. In NeuS, we propose to represent a surface as the zero-level set of a signed distance function (SDF) and develop a new volume rendering method to train a neural SDF representation. We observe that the conventional volume rendering method causes inherent geometric errors (i.e., bias) for surface reconstruction, and therefore propose a new formulation that is free of bias to a first-order approximation, leading to more accurate surface reconstruction even without mask supervision. Experiments on the DTU dataset and the BlendedMVS dataset show that NeuS outperforms state-of-the-art methods in high-quality surface reconstruction, especially for objects and scenes with complex structures and self-occlusion.

Introduction

Given a set of posed 2D images of a 3D object, our goal is to reconstruct the surface of the object. The surface is represented by the zero-level set of an implicit signed distance function (SDF) encoded by a fully connected neural network (MLP). To learn the weights of this network, we develop a novel volume rendering method that renders images from the implicit SDF, and we minimize the difference between the rendered images and the input images. This volume rendering approach ensures robust optimization in NeuS when reconstructing objects with complex structures.
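As a rough illustration of rendering from an SDF, the sketch below computes per-sample compositing weights along a single ray. It is a minimal sketch under several assumptions, not the released implementation: an analytic `sphere_sdf` stands in for the MLP, the sharpness `s` is fixed rather than learned, color is omitted, and the discrete opacity follows the form given in the NeuS paper, alpha_i = max((Φ_s(f(p_i)) − Φ_s(f(p_{i+1}))) / Φ_s(f(p_i)), 0), with Φ_s the sigmoid scaled by `s`. All function and parameter names are hypothetical.

```python
import math


def sphere_sdf(point, radius=1.0):
    """Signed distance to a sphere centred at the origin (negative inside).

    Stand-in for the neural SDF; the real method evaluates an MLP here.
    """
    return math.sqrt(sum(c * c for c in point)) - radius


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def render_weights(origin, direction, n_samples=120, near=0.0, far=6.0, s=64.0):
    """Return interval midpoints and compositing weights along one ray."""
    # Uniformly spaced depths along the ray, and the SDF value at each one.
    ts = [near + (far - near) * i / n_samples for i in range(n_samples + 1)]
    sdf = [sphere_sdf([o + t * d for o, d in zip(origin, direction)]) for t in ts]
    # Phi_s(f(p_i)): sigmoid of the scaled SDF (assumed fixed sharpness s).
    cdf = [sigmoid(s * v) for v in sdf]

    weights, transmittance = [], 1.0
    for i in range(n_samples):
        # Opacity is positive only where the SDF decreases along the ray,
        # i.e. where the ray is entering the surface.
        alpha = max((cdf[i] - cdf[i + 1]) / max(cdf[i], 1e-10), 0.0)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    mids = [0.5 * (ts[i] + ts[i + 1]) for i in range(n_samples)]
    return mids, weights


def expected_depth(mids, weights):
    """Weight-averaged depth along the ray."""
    total = sum(weights)
    return sum(t * w for t, w in zip(mids, weights)) / total


# A ray from z = -3 towards the unit sphere first meets the surface at depth 2.
mids, weights = render_weights([0.0, 0.0, -3.0], [0.0, 0.0, 1.0])
print(expected_depth(mids, weights))  # close to 2.0
```

In this toy setting the weights concentrate around the first zero crossing of the SDF and vanish after the ray has passed inside the surface, which is the behaviour the rendering formulation is designed to encourage. A ray that misses the sphere accumulates essentially zero weight.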

Our method accurately reconstructs thin structures, especially at edges with abrupt depth changes.

Comparisons (w/o mask supervision)

Scan 69 from the DTU dataset

Statue from the BlendedMVS dataset

Comparisons (w/ mask supervision)

Scan 37 from the DTU dataset

Stone from the BlendedMVS dataset

More Results of NeuS (w/o mask supervision)

Scenes from the DTU dataset

Scenes from the BlendedMVS dataset

Citation

@article{wang2021neus,
  title={NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction},
  author={Peng Wang and Lingjie Liu and Yuan Liu and Christian Theobalt and Taku Komura and Wenping Wang},
  journal={NeurIPS},
  year={2021}
}

Acknowledgements

We thank Michael Oechsle for providing the results of UNISURF. Christian Theobalt was supported by ERC Consolidator Grant 770784. Lingjie Liu was supported by a Lise Meitner Postdoctoral Fellowship.